Engineers at Twitter and PayPal have joined Facebook in offering a look under the hood of the massive MySQL deployments that drive their web services.

Database and application engineers at both of the web giants provided intimate details of how they use MySQL open source relational databases to run globally-distributed, highly available and consistent web applications.

The move echoes a Facebook webcast in late 2011 that revealed some mind-blowing stats on the volume and response times of queries from a customer base growing close to one billion active users.

The engineers used sessions at this week's MySQL Connect conference in San Francisco to dispute commonly held assertions about the limits of relational database technology in solving what are commonly referred to as 'Big Data' problems.

'Big Data' is a purported condition in which an organisation is unable to process the growing volume of data captured in narrowing periods of time by globally-distributed web applications.

Twitter's MySQL

By the numbers:

- Over 140 million active users

- 4629 tweets per second (25,000 at peak)

- Three million new rows created per day

- 400 million tweets per day, replicated four times
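As a quick sanity check, the average per-second rate above follows from the daily total. The sketch below is back-of-the-envelope arithmetic only; the variable names are illustrative, and reading "replicated four times" as four stored copies is an assumption rather than something Twitter spelled out.

```python
# Back-of-the-envelope check of the averages quoted in the list above.
TWEETS_PER_DAY = 400_000_000    # figure quoted by Twitter
COPIES_PER_TWEET = 4            # assumption: "replicated four times" = four stored copies
SECONDS_PER_DAY = 24 * 60 * 60

# Average rate, rounded down to a whole tweet.
avg_tweets_per_second = TWEETS_PER_DAY // SECONDS_PER_DAY
# Total tweet copies written per day under the four-copy assumption.
stored_copies_per_day = TWEETS_PER_DAY * COPIES_PER_TWEET

print(avg_tweets_per_second)    # 4629
print(stored_copies_per_day)    # 1600000000
```

The quoted peak of 25,000 tweets per second is therefore a little over five times the daily average.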

Jeremy Cole, database administration team manager at Twitter, told attendees that the micro-blogging network uses a commercial instance of MySQL because there are "some features we desperately need to manage the scale we have and to respond to problems in production".

Cole revealed that Twitter's MySQL database handles some huge numbers — three million new rows per day, the storage of 400 million tweets per day replicated four times over — but it is managed by a team of only six full-time administrators and a sole MySQL developer.

"I am often asked, why does Twitter use MySQL when there are NoSQL solutions out there that would solve our problems?" he said.

"The answer is we know MySQL. It is operable, we know how to upgrade and downgrade it, we know how to fix bugs and put out new releases. We know exactly how it works internally, we have the source code.

"It is also high performance. Most of the fleet runs 10,000 to 50,000 queries per second on every server."

Cole said the assumption that NoSQL databases are "so much faster than MySQL isn't true".

Twitter uses MySQL as a "building block," he said, "as a core of features we understand and functionality we trust", upon which his team uses Gizzard for sharding and replication, InnoDB as its storage system, and a NoSQL database called FlockDB.

Twitter's large Gizzard clusters, which the devs have codenamed 'T-Bird', distribute the storage of Twitter's 400 million tweets per day across 50 machines.

The FlockDB system — codenamed TFlock and built on top of MySQL — is a "source to destination ID distributed hash map".

In plain English, it maps the relationships between Twitter users, lists timelines for users (all by user and Tweet ID) and counts of relationships (how many times you tweeted, how many times re-tweeted).
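To make the "source to destination ID" idea concrete, here is a minimal in-memory sketch. It is not FlockDB's API or Twitter's implementation: the `EdgeStore` class, its method names and the modulo sharding rule are all hypothetical, and plain Python dictionaries stand in for the MySQL-backed shards.

```python
from collections import defaultdict


class EdgeStore:
    """Toy source-ID -> destination-ID map, split across shards by source ID."""

    def __init__(self, shard_count=4):
        # Each dict stands in for one database shard (an assumption for illustration).
        self.shards = [defaultdict(set) for _ in range(shard_count)]

    def _shard_for(self, source_id):
        # Hypothetical placement rule: shard chosen by source ID modulo shard count.
        return self.shards[source_id % len(self.shards)]

    def add_edge(self, source_id, destination_id):
        self._shard_for(source_id)[source_id].add(destination_id)

    def destinations(self, source_id):
        # .get() avoids creating an empty entry for unknown sources.
        return sorted(self._shard_for(source_id).get(source_id, ()))

    def count(self, source_id):
        return len(self._shard_for(source_id).get(source_id, ()))


store = EdgeStore()
store.add_edge(1, 20)   # e.g. user 1 follows user 20
store.add_edge(1, 30)
store.add_edge(5, 1)

print(store.destinations(1))  # [20, 30]
print(store.count(5))         # 1
```

Because every lookup starts from a single source ID, only one shard has to be consulted per query, which is the property that makes this kind of map easy to distribute.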

"MySQL in and of itself is not a purpose-built key-value store; it does work, you may issue thousands of queries to server to get your results back," Cole explained.

PayPal's MySQL

By the numbers:

- Over 100 million active users.

- 256-byte reads in under 10 milliseconds.

- Global replication of writes in under 350 milliseconds for a single 32-bit integer.

- Runs on Amazon Web Services in US, Japan and European data centres.

Daniel Austin, a technology architect at PayPal, has built a globally-distributed database with 100 terabytes of user-related data, also based on a MySQL cluster.

Austin said he was charged with building a system with 99.999 percent availability, without any loss of data, an ability to support transactions (and roll them back), and an ability to write data to the database and read it anywhere else in the world in under one second.
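For a sense of what "five nines" allows, the sketch below converts the 99.999 percent target into a yearly downtime budget. It is plain arithmetic, assumes a 365-day year, and uses illustrative variable names; it says nothing about how PayPal measures availability.

```python
# Downtime budget implied by a 99.999 percent availability target.
SECONDS_PER_YEAR = 365 * 24 * 60 * 60   # assumption: non-leap year

# 99.999% available means 1 part in 100,000 may be unavailable.
UNAVAILABLE_PARTS = 1
TOTAL_PARTS = 100_000

downtime_seconds = round(SECONDS_PER_YEAR * UNAVAILABLE_PARTS / TOTAL_PARTS, 2)
downtime_minutes = round(downtime_seconds / 60, 2)

print(downtime_seconds)   # seconds of permitted downtime per year
print(downtime_minutes)   # the same budget in minutes
```

The budget works out to a little over five minutes a year, which helps explain the emphasis on removing single points of failure.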

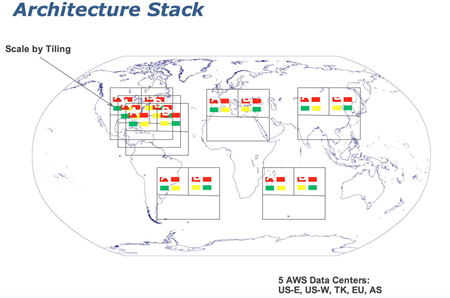

PayPal hosts this globally distributed MySQL system using Amazon Web Services' data centres on both coasts of the United States, two in Asia and another in Europe.

The system has been designed to cater for PayPal's growth and for high availability.

Austin said that in each availability zone, PayPal builds out 'tiled' configurations of two extra large data nodes for every small MySQL query and management node. The web giant brings on more boxes as required, using the same ratio so the system can "scale to an arbitrarily larger amount of users over time," he said.
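The fixed ratio can be expressed as a small capacity calculation. This is a sketch under stated assumptions: it treats the "query and management node" as a single combined machine per tile, and the `Capacity` type and `capacity_for` function are hypothetical names, not part of any PayPal or MySQL tooling.

```python
from dataclasses import dataclass

DATA_NODES_PER_TILE = 2         # "two extra large data nodes"
QUERY_MGMT_NODES_PER_TILE = 1   # assumption: one combined query/management node


@dataclass(frozen=True)
class Capacity:
    data_nodes: int
    query_mgmt_nodes: int


def capacity_for(tiles: int) -> Capacity:
    """Return the node counts needed for a given number of tiles."""
    if tiles < 0:
        raise ValueError("tiles must be non-negative")
    return Capacity(
        data_nodes=tiles * DATA_NODES_PER_TILE,
        query_mgmt_nodes=tiles * QUERY_MGMT_NODES_PER_TILE,
    )


three_tiles = capacity_for(3)
print(three_tiles.data_nodes, three_tiles.query_mgmt_nodes)  # 6 3
```

Growing the system then means adding whole tiles, so the 2:1 ratio between data nodes and query nodes never changes.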

A replica of each 'tile' is housed within each Amazon availability zone. But for true high-availability, PayPal's engineers need to ensure there are no single points of failure.

Source: PayPal

As such, they designed a "peer-based replication" system that operates in a circular pattern, in which each node in a cluster fails over to the next node in the ring.

In a configuration of four globally-distributed clusters, for example, Node A will fail over to Node B, B to C, C to D, and D back to A.

If Node C drops out, the pattern changes. If Node D doesn't hear from Node C within a given period of time, it will hit Node B instead and the system "heals itself" with three nodes until the fourth is back online.
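The self-healing behaviour described above can be sketched as a lookup over a ring of nodes. This is an illustration, not PayPal's implementation: the article does not say how missed heartbeats are detected, so the `alive` set simply stands in for the outcome of that timeout check, and `upstream_of` is a hypothetical helper name.

```python
def upstream_of(node, ring, alive):
    """Return the nearest live node that precedes `node` in the ring, or None."""
    start = ring.index(node)
    # Walk backwards around the ring, skipping nodes that are not responding.
    for step in range(1, len(ring)):
        candidate = ring[(start - step) % len(ring)]
        if candidate in alive:
            return candidate
    return None  # no other live node is left


ring = ["A", "B", "C", "D"]

# All four nodes healthy: D listens to C, and A wraps around to D.
print(upstream_of("D", ring, alive={"A", "B", "C", "D"}))  # C
print(upstream_of("A", ring, alive={"A", "B", "C", "D"}))  # D

# Node C drops out: D skips it and listens to B instead.
print(upstream_of("D", ring, alive={"A", "B", "D"}))       # B
```

When Node C returns to the `alive` set, the same lookup puts it back between B and D without any other node needing special handling.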

"We tested this — and a lot of people were very skeptical," Austin said.

"But it works better than with master and slave, it heals itself faster, it's easier to bring nodes in and out. Using this design, all the systems have the same data give or take a few fields that haven't synched yet. It makes good sense for a globally distributed system."

The only minor complication, which he learned on day one, was that "you have to do it in one direction": a node will soon hit a timestamp error if it attempts to read data that another node wrote in the future (due to data moving across datelines).

Austin conceded that using MySQL clusters in Amazon's cloud has its cons.

MySQL has some size constraints for data nodes (two terabytes) and AWS has network speed limits (250 Mbps). While AWS is "cheap" and "looks easy" for a single server, there are "challenges to making it work in the real world as a large scale system," he said.

Builders of globally-distributed systems on the Amazon cloud have noted (when attempting to resolve an IP address) that availability zones "aren't entirely separate", for example, and neither are they consistent from one zone to another.

"The [availability zones] aren't Barbie Dolls," Austin said.

Facebook's MySQL

By the (2011) numbers:

- Over 950 million active users

- Rows read per second: 450 million (at peak)

- Queries per second: 13 million (at peak)

- Query response times: 4ms reads, 5ms writes

- Rows changed per second: 3.5 million (at peak)

Facebook hasn't updated the industry on its database technology since it went public earlier this year. But even its 2011 performance is a benchmark.

As of late 2011, the company employed three database teams working on its MySQL cluster: an engineering group responsible for long-term strategy, a performance group that looks at day-to-day changes, and an operations group that troubleshoots database issues on close to a real-time basis.

Facebook's customer base, now at a billion users, creates something like 13 million database queries per second (at peak), and the social networking giant has tuned its systems to read in under four milliseconds and write in five milliseconds.

The company's database engineers have argued that chasing the highest number of queries per second (QPS) isn't the end game.

"The performance community can be derailed by chasing the highest QPS," Facebook database engineer Mark Callaghan told attendees at Facebook's tech talk last year.

"It's ok if a query is slow, so long as it's always slow. It's when queries are fast at one time, and slow another, that leads to unhappy users."
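Callaghan's point about consistency can be shown with two made-up latency samples that share the same average. The numbers below are invented for illustration and are not Facebook measurements; the sketch only shows why an average alone hides the behaviour that frustrates users.

```python
import statistics

# Hypothetical response times in milliseconds (not real measurements).
steady = [20.0] * 10             # always "slow", but predictable
erratic = [2.0] * 9 + [182.0]    # usually fast, occasionally very slow

summary = {}
for name, samples in (("steady", steady), ("erratic", erratic)):
    summary[name] = {
        "mean": statistics.mean(samples),
        "spread": statistics.pstdev(samples),  # population standard deviation
        "worst": max(samples),
    }
    print(name, summary[name])
```

Both samples average 20 ms, yet the erratic one has a large spread and a worst case roughly nine times slower than the steady one, which is the variation users actually notice.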

Next chapters

The next chapter for the world's largest social networks is to build better monitoring tools.

Callaghan said in late 2011 that Facebook was interested in "sub-second level monitoring" — catching out and sometimes killing queries that stall for only milliseconds — in order to further tune the performance of the social network.

Cole said Twitter is also looking into 'high frequency' monitoring tools to pick up flaws in its system that today go unnoticed.

"MySQL doesn't provide tools for tracking performance in milliseconds," Cole explained.

"Today we can see a 60-second stall, but we'd like to track down one or two or three second stalls."

Correction (06/10/12): The original story stated that PayPal has over 17m users. PayPal in fact has over 100m users, and over 17m mobile users. iTnews apologises for this error.